THETA: Triangulated Hand-State Estimation for Teleoperation and Automation in Robotic Hand Control


Tony Stark was right—teleoperation (the remote control of robotic devices) is truly the future.

Specifically, the demand for robotic devices that mimic the dexterity and manipulative abilities of our biggest blessing, the human hand and fingers, is set to grow sharply in the coming years. For now, though, the effectiveness of robotic teleoperation is limited by several factors, including:

- The high costs and limitations of the current methods for teleoperation, like:

- High-end 4D motion-tracking camera systems like the Vicon Valkyrie VK26 system, which costs more than $10,000 per setup.

- Finger-tracking sensor gloves, like the Manus Prime X ($5,000+) and CyberGlove II ($10,000+), which further increase expenses.

- Intel RealSense Depth Cameras (and Google MediaPipe landmark tracking) - $600+.

- This method is also prone to vision occlusion, where the camera cannot calculate the joint angles of a hand not directly facing the camera.

- The difficult setup of these (and other) methods, which makes them inconvenient for the average consumer.

THETA pipeline demo.

Experimental Design & Methodology

1. Robotic Hand Development & ROS2 Control

- The constructed hand and wrist mechanism is based largely on the DexHand CAD model by TheRobotStudio.

- The hand consists entirely of 3D-printed parts, fishing line, bearings, springs, mini servos, and screws.

- The phalanges, knuckle joints, and metacarpal bones are fastened with 80-lb fishing line and 2 mm springs.

- 3x Emax ES3352 12.4 g mini servos and 1 spring actuate each finger:

- 2x servos for abduction/adduction and finger base flexion.

- 1x servo for fingertip flexion.

- 1 spring for fingertip (distal) and base (proximal) extension.

Constructed & modified DexHand.

Fingertip flexion by pulling on ligament. Spring (tip extension) circled.

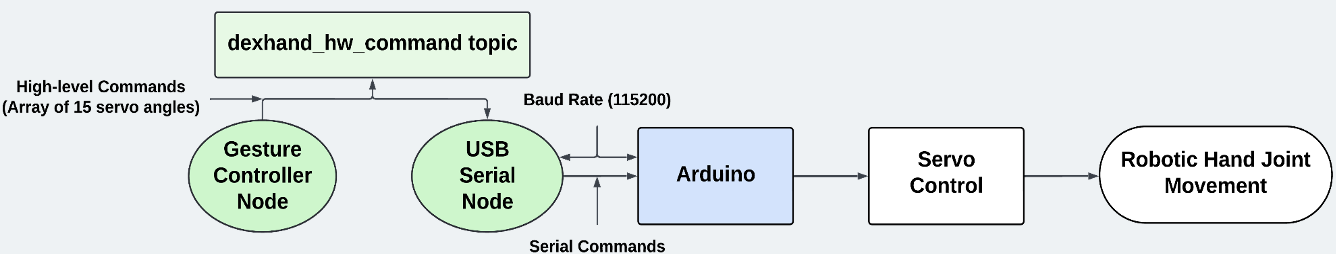

- To control the DexHand:

- An Ubuntu VM with USB passthrough is used as the ROS2 environment.

- The Arduino pipeline relies on two main ROS2 nodes to facilitate robotic hand movement.

- High-level commands are converted into serial messages, which the Arduino interprets to control the robotic hand servos.

ROS 2-Arduino joint angle transmission pipeline for robotic hand servo actuation.
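As a rough illustration of the last step in that pipeline, the sketch below packs a list of servo angles into one line of text that a microcontroller could parse. The message format (comma-separated integers ending in a newline), the function name `encode_servo_command`, and the 0 to 180 degree clamp are assumptions made for illustration, not the project's actual protocol.

```python
def encode_servo_command(angles_deg):
    """Pack servo angles into a newline-terminated ASCII line.

    Hypothetical wire format: "a0,a1,...,aN\n", one integer per servo.
    """
    # Round to whole degrees and clamp to a typical hobby-servo range.
    clamped = [max(0, min(180, int(round(a)))) for a in angles_deg]
    return (",".join(str(a) for a in clamped) + "\n").encode("ascii")


message = encode_servo_command([90, 180.4, -5])
```

In a ROS2 node, bytes like these would typically be written to the serial port (for example with pyserial's `Serial.write`), and the Arduino sketch would split the line on commas before driving each servo.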

2. Multi-View Data Collection & Annotation

- A ground-truth dataset of gesture joint angles was used to train the ML pipeline.

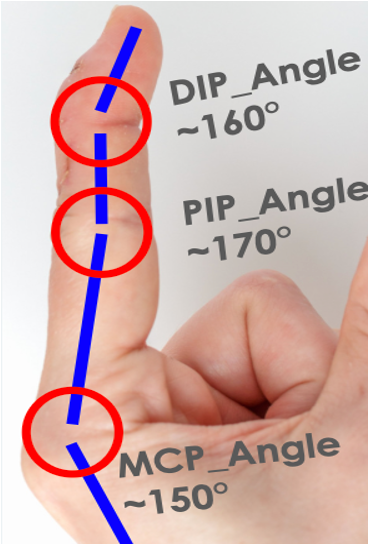

- 15 features (15 joint angles of fingers) across 40 distinct hand gestures were measured manually with a protractor. In each finger:

- Metacarpophalangeal (MCP) joint: flexion/extension, abduction/adduction at the knuckle.

- Proximal Interphalangeal (PIP) joint: mid-finger bending.

- Distal Interphalangeal (DIP) joint: fingertip actuation.

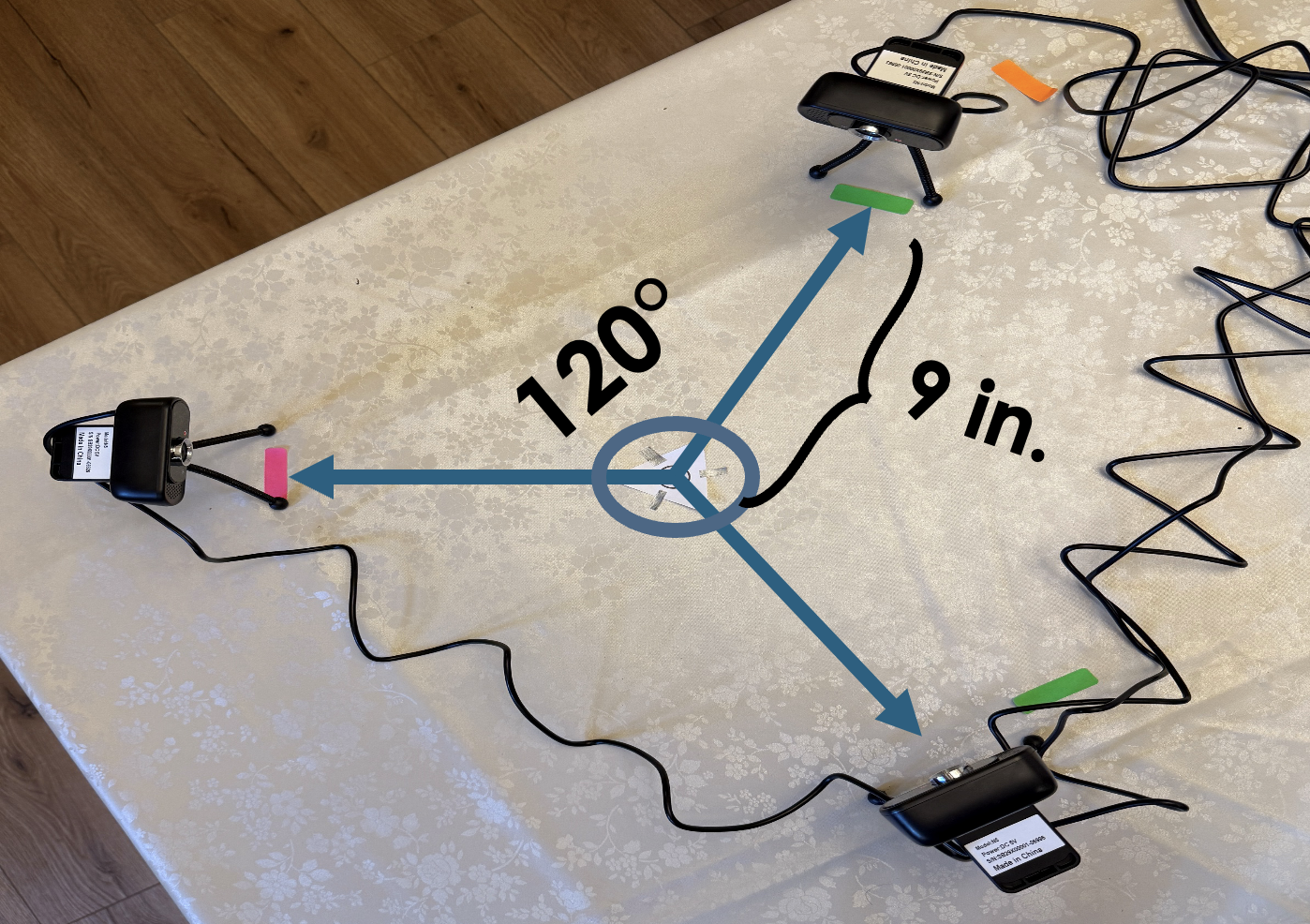

- RGB image capture (640×480, 30 FPS) from all three cameras (hence the triangulation) was synchronized while performing the selected hand gesture (see table).

- In total, more than 48,000 images were captured for the dataset (~1,200 images per gesture).

- The corresponding joint angles were recorded, and a ±5-degree error threshold was added to account for any human error in the data collection process.

| Gesture ID | Gesture Name | Index MCP Angle | Index PIP Angle | Index DIP Angle | Middle MCP Angle |
| --- | --- | --- | --- | --- | --- |
| 1 | Closed Fist | 90 (±5°) | 90 (±5°) | 110 (±5°) | 90 (±5°) |
| 2 | Open Palm | 180 (±5°) | 180 (±5°) | 180 (±5°) | 180 (±5°) |
| 3 | Number One | 180 (±5°) | 180 (±5°) | 180 (±5°) | 90 (±5°) |

Example entries from dataset.
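One way to picture these dataset entries in code is a lookup of reference angles plus a check against the ±5° threshold. The sketch below copies only the four example columns shown above (the full dataset has 15 joints per gesture). The dictionary layout, key names, and `within_tolerance` helper are hypothetical.

```python
TOLERANCE_DEG = 5.0  # the ±5° error threshold from the data collection step

# Partial ground-truth rows taken from the example entries (4 of 15 joints).
GROUND_TRUTH = {
    "Closed Fist": {"index_mcp": 90, "index_pip": 90, "index_dip": 110, "middle_mcp": 90},
    "Open Palm": {"index_mcp": 180, "index_pip": 180, "index_dip": 180, "middle_mcp": 180},
    "Number One": {"index_mcp": 180, "index_pip": 180, "index_dip": 180, "middle_mcp": 90},
}


def within_tolerance(gesture, joint, angle_deg, tol=TOLERANCE_DEG):
    """Return True if angle_deg is within tol degrees of the reference angle."""
    return abs(angle_deg - GROUND_TRUTH[gesture][joint]) <= tol
```

For example, a measured index DIP angle of 114° for "Closed Fist" would count as matching the 110° reference, while 116° would not.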


Joint data collection diagram.

Triangulation (multi-view) data collection setup.

Triangulated, synchronized RGB images captured from webcams (right, left, and front).

- This same triangulation setup (see specific measurements above) is also used for real-time inference.

- The hand is “scanned” by the three cameras simultaneously, and the 15x1 angle vector output by the CNN model is fed to the robotic hand, enabling real-time teleoperation.

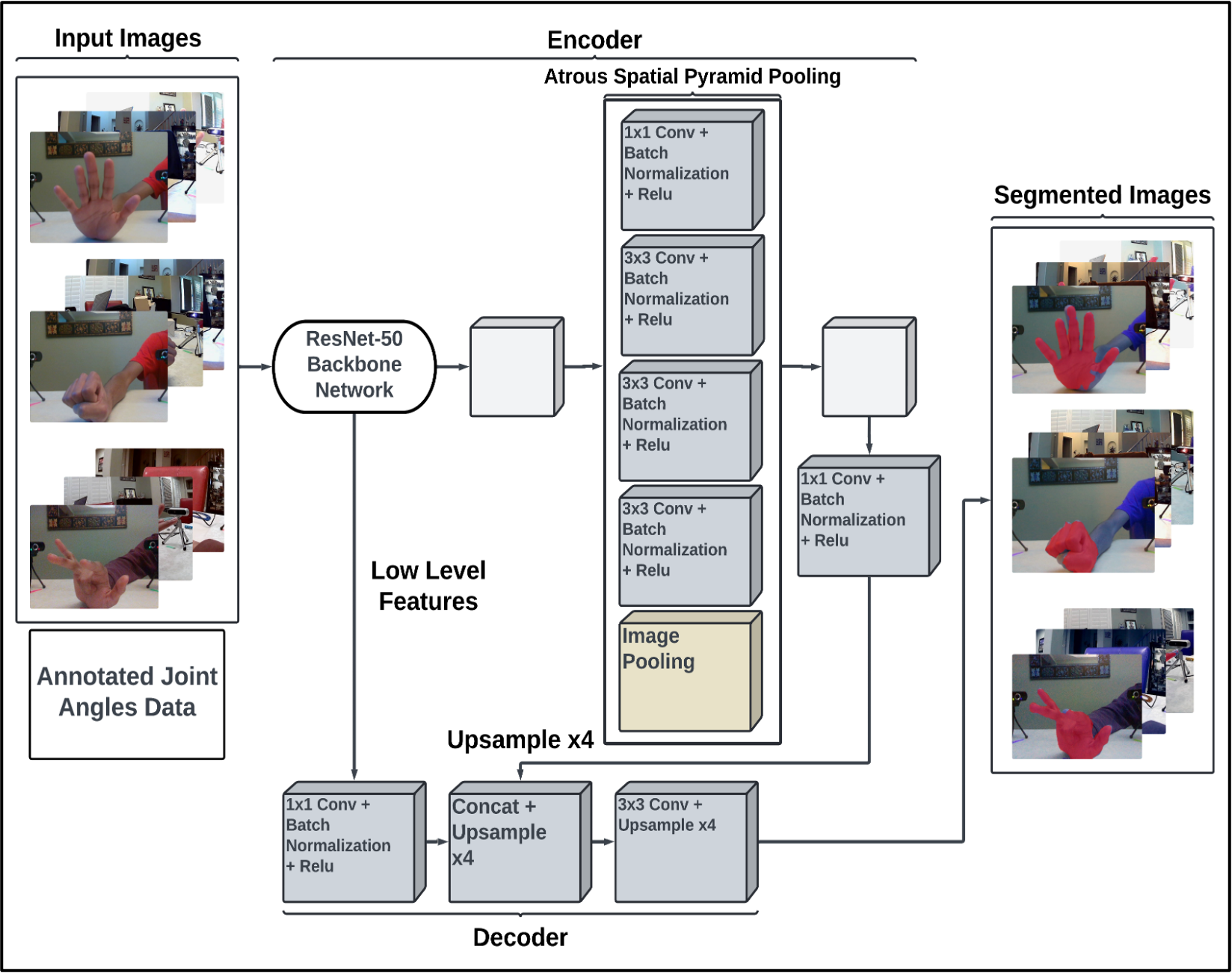
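The real-time loop described above can be sketched as a single capture, predict, actuate cycle. The three callables passed in are hypothetical stand-ins for the camera, model, and robot interfaces. Only the three-view input and the 15-element angle vector come from the description above.

```python
NUM_JOINTS = 15  # length of the angle vector produced by the model


def teleoperation_step(capture_views, predict_angles, send_to_hand):
    """Run one capture -> predict -> actuate cycle.

    capture_views, predict_angles, and send_to_hand are caller-supplied
    callables (assumed interfaces, not the project's actual API).
    """
    frames = capture_views()  # expected: one frame per camera
    if len(frames) != 3:
        raise ValueError("expected frames from exactly three cameras")
    angles = predict_angles(frames)
    if len(angles) != NUM_JOINTS:
        raise ValueError("expected one value per joint")
    send_to_hand(angles)
    return angles
```

Calling `teleoperation_step` repeatedly, once per synchronized set of frames, gives the basic teleoperation loop. Validating the shapes at each stage makes a dropped camera or a malformed prediction fail loudly instead of moving the hand unpredictably.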

3. Segmentation Preprocessing & THETA Joint Angle Classification

- The images are first segmented (hand is cut out from the background) through the following process:

- Images are preprocessed:

- Resized to 224×224 to reduce size (the input tensor is 224×224×3).

- Pixel values are normalized.

- Image features are extracted, linked to angle vectors:

- Image passed through DeepLabV3 with the ResNet-50 backbone.

- DeepLabV3 is a semantic segmentation network, which classifies every pixel in an image as a category (like “dog”, “sky”, etc).

- ResNet-50 is a (lightweight) pretrained deep residual network with 50 layers (hence the name), which processes the input image to produce the feature maps that are linked to that image’s corresponding angle vector.

- Atrous Spatial Pyramid Pooling (ASPP) is applied to capture features at multiple scales.



- Segmentation of the hand is predicted:

- Masks (the hand region) are optimized with BCEWithLogitsLoss.

- Erosion/dilation touchups are applied to remove noise.

- The hand is segmented in red! (All the red in the original RGB images is masked over with blue to prevent unintended red masks in the images.)


End-to-end segmentation pipeline.
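The preprocessing step at the start of this pipeline (resize to 224×224, then normalize) can be sketched in plain Python as follows. Nearest-neighbor resizing and scaling pixel values to the range 0 to 1 are assumptions. The exact interpolation and normalization constants are not stated above, and the function names are illustrative.

```python
def resize_nearest(image, out_h=224, out_w=224):
    """Nearest-neighbor resize; image is a list of rows of (r, g, b) tuples."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def normalize(image):
    """Scale 8-bit channel values to floats in [0, 1] (assumed normalization)."""
    return [[tuple(c / 255.0 for c in px) for px in row] for row in image]


def preprocess(image):
    """Resize to 224x224, then normalize, giving a 224x224x3 nested list."""
    return normalize(resize_nearest(image))
```

In practice these steps would more likely be done with a library routine such as OpenCV's `cv2.resize` or torchvision transforms. The pure-Python version is only meant to make the shapes explicit.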

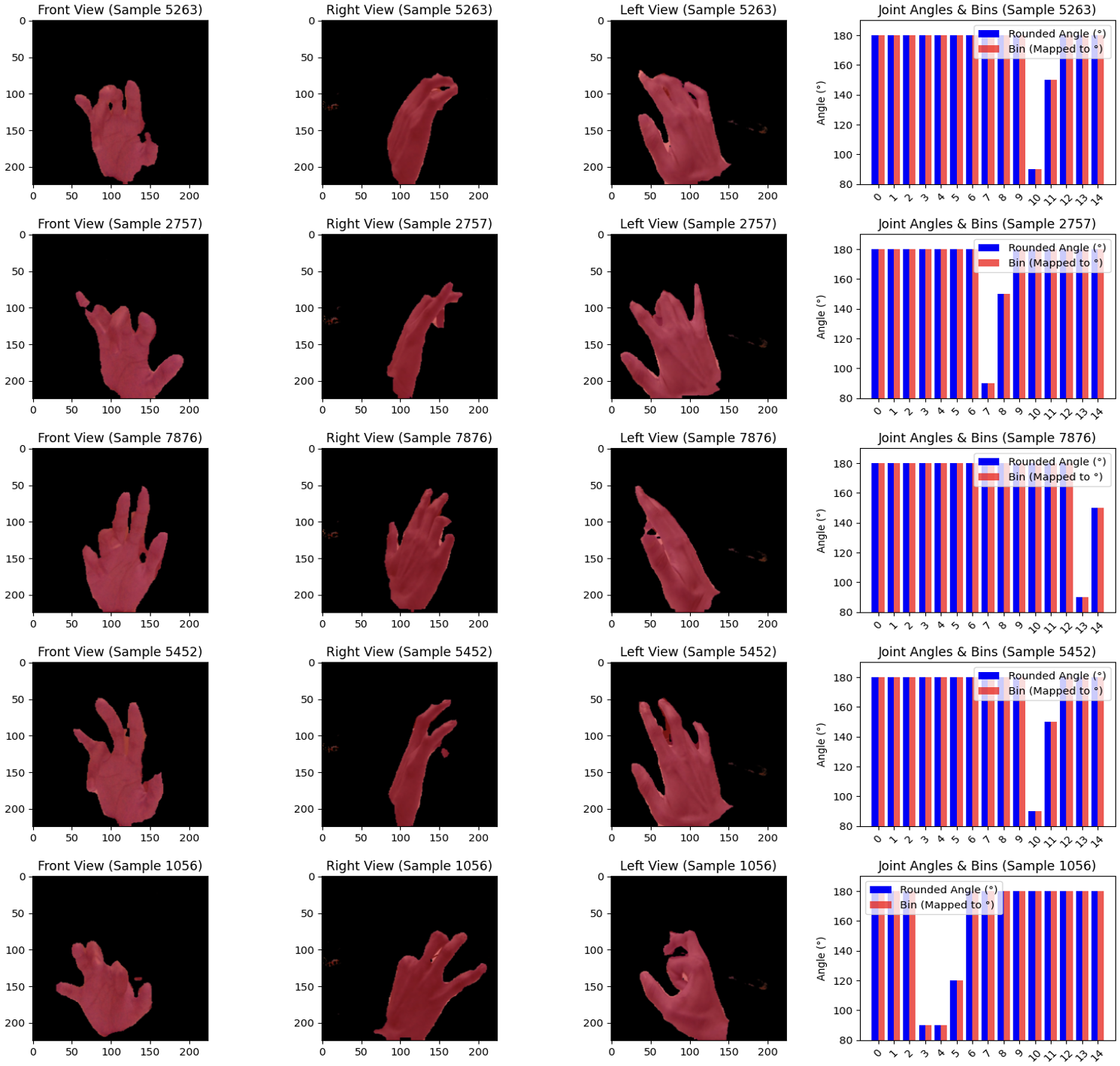

Red masks segmenting the hand, across triangulated views.
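The erosion/dilation cleanup mentioned in the segmentation steps can be illustrated with a small morphological opening (erode, then dilate) on a binary mask. The 3×3 neighborhood and the erode-then-dilate order are assumptions, since the exact kernel and order are not specified above.

```python
def _morph(mask, keep):
    """Apply a 3x3 neighborhood rule to a binary mask (list of lists of 0/1)."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Neighborhood is clipped at the image border.
            window = [
                mask[ny][nx]
                for ny in range(max(0, y - 1), min(h, y + 2))
                for nx in range(max(0, x - 1), min(w, x + 2))
            ]
            out[y][x] = 1 if keep(window) else 0
    return out


def erode(mask):
    """Keep a pixel only if every pixel in its neighborhood is set."""
    return _morph(mask, all)


def dilate(mask):
    """Set a pixel if any pixel in its neighborhood is set."""
    return _morph(mask, any)


def open_mask(mask):
    """Morphological opening: removes small specks, keeps larger regions."""
    return dilate(erode(mask))
```

Applied to a mask containing a solid 3×3 hand region and a single stray pixel, `open_mask` removes the stray pixel and restores the 3×3 region. OpenCV's `cv2.erode` and `cv2.dilate` provide the same operations for real images.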

4. THETA Joint Angle Prediction, Real-Time Inference, & Results

- After segmentation, the masked images are HSV-processed (background = black, hand = red) and passed through MobileNetV2, a convolutional neural network (CNN).

- During model training:

- Joint angles are binned 1-9, where:

- 1 corresponds to 80-90 degrees (our defined threshold of the smallest angle a finger joint is able to make)

- 2 corresponds to 90-100 degrees… etc

- 9 corresponds to 170-180 degrees (our defined threshold of the largest angle a finger joint is able to make)

- Input data (multi-view HSV images) are classified into one of the 9 bins

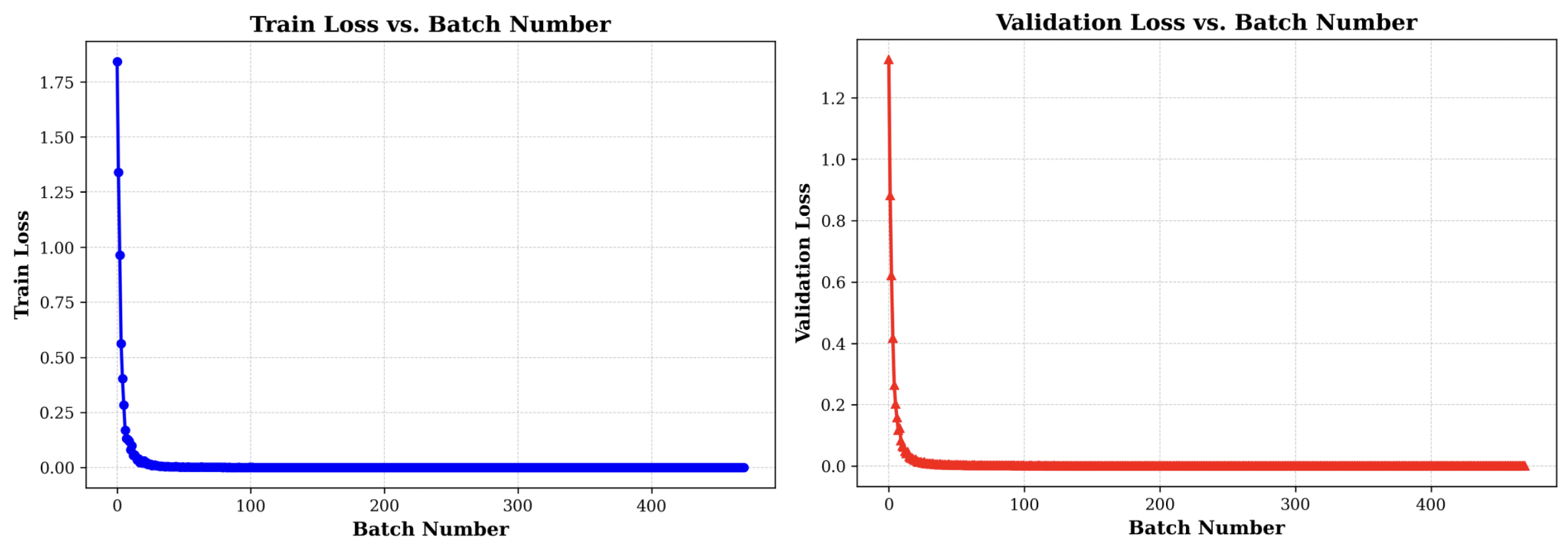

- Training is optimized:

- Focal Loss (γ = 2.0)

- Adam (LR = 0.001)

- Trained for 10 epochs.

- Last layer is modified:

- Reshaped to (batch, 15, 10)

- T = 2.0 scaling

- Softmax


MobileNetV2 Inputs: 224×224×3 HSV image, angle vectors.
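The modified last layer listed above (reshape to 15 joints × 10 classes, temperature scaling with T = 2.0, then softmax) can be sketched for a single sample in plain Python. The function names and the flat 150-value input layout are assumptions, and the sketch stops at the predicted class index per joint without mapping it back to degrees.

```python
import math

NUM_JOINTS = 15
NUM_CLASSES = 10
TEMPERATURE = 2.0


def softmax(logits, temperature=TEMPERATURE):
    """Temperature-scaled softmax; higher temperature gives softer probabilities."""
    scaled = [v / temperature for v in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def decode_head(flat_logits):
    """Split 150 logits into 15 rows of 10 and pick the most likely class per joint."""
    if len(flat_logits) != NUM_JOINTS * NUM_CLASSES:
        raise ValueError("expected 15 * 10 logits for one sample")
    rows = [
        flat_logits[j * NUM_CLASSES:(j + 1) * NUM_CLASSES]
        for j in range(NUM_JOINTS)
    ]
    probs = [softmax(row) for row in rows]
    classes = [max(range(NUM_CLASSES), key=p.__getitem__) for p in probs]
    return probs, classes
```

In PyTorch the same idea is usually written as a `view(-1, 15, 10)` on the logits followed by `torch.softmax(logits / T, dim=-1)`. Dividing by T before the softmax flattens the distribution, which tempers overconfident predictions.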

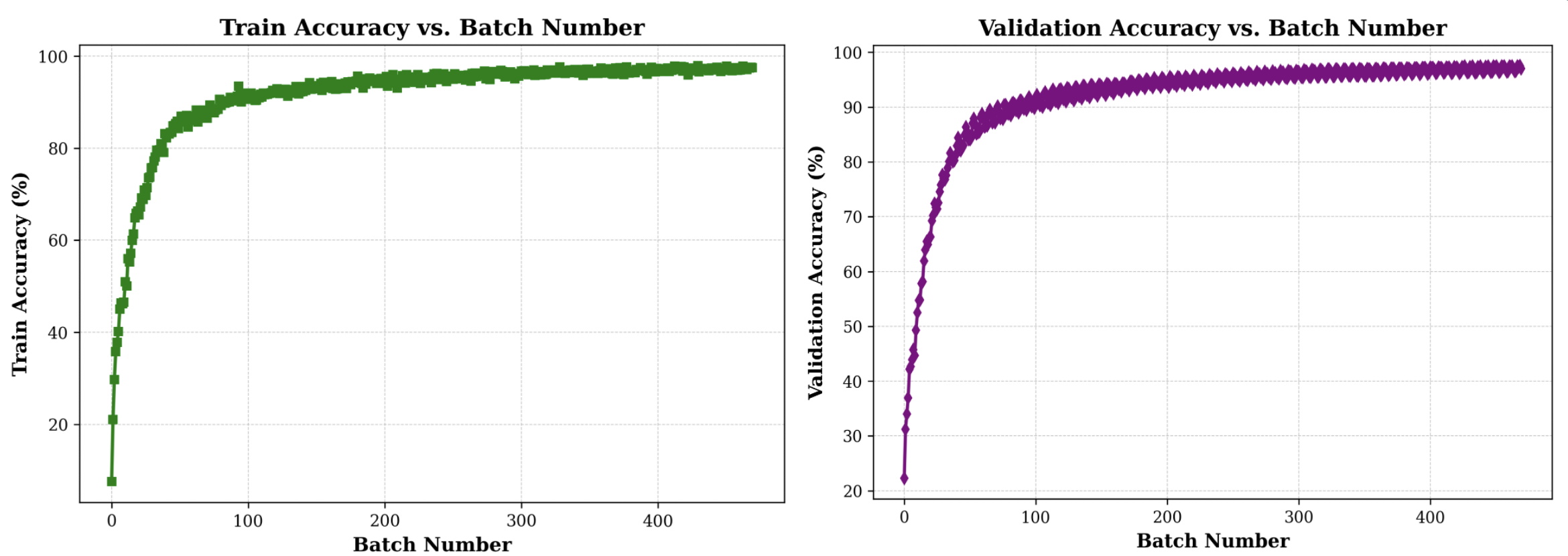

High training and validation accuracy with loss convergence to 0.0001, demonstrating strong generalization, minimal overfitting, and reliable joint angle classification.
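For reference, the focal loss used during training (γ = 2.0) down-weights examples the model already classifies confidently. The sketch below is a minimal per-example version for one joint, assuming the standard form FL = −(1 − p_t)^γ · log(p_t) with no class-weighting term. Whether the project also used a weighting factor is not stated above.

```python
import math


def focal_loss(probs, target, gamma=2.0):
    """Focal loss for one example.

    probs:  class probabilities (e.g. softmax output) for one joint
    target: index of the true class
    gamma:  focusing parameter; gamma = 0 reduces to cross-entropy
    """
    p_t = min(max(probs[target], 1e-12), 1.0)  # guard against log(0)
    return -((1.0 - p_t) ** gamma) * math.log(p_t)
```

With γ = 2.0, an example predicted at 0.9 probability contributes only 1% of its cross-entropy loss, so training effort shifts toward harder, less frequent classes.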

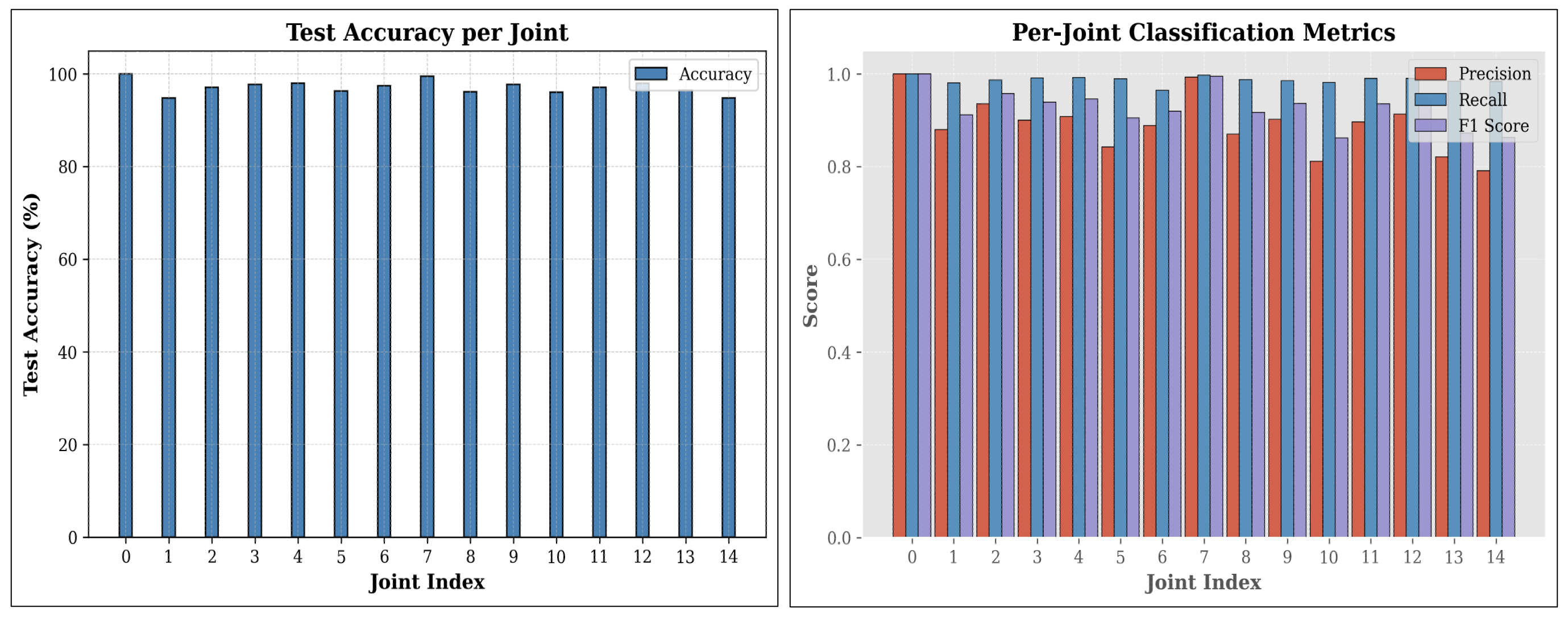

- Overall, the CNN reached 97.18% accuracy on the testing set, suggesting that it generalizes well when predicting joint angles from multi-view images.

- Other important metrics:

- F1 score: 0.9274

- Precision: 0.8906

- Recall: 0.9872


Important metrics for MobileNetV2 model.


Conclusion

THETA’s simple setup and robustness have the potential to increase the accessibility of highly compliant teleoperated robotic hands, with implications for countless real-life fields, such as:

- Household prosthetics - improve automation and AI functionalities, especially for those with disabilities or a reduced quality of life.

- Linguistics - facilitate remote/automated sign language interpretation and gestures, like ASL (American Sign Language)

- Medical field - support remote surgical procedures with precise joint angle control systems (once our robotic hands are built to industry standards!)

- Inaccessible exploration - enable dexterous object manipulation during space missions or rescue missions in inaccessible places.

- Manufacturing & agriculture - automate precise grasping and manipulation of consumer goods.

What’s next?

As with any good project, there is future research planned:

- Develop adaptive learning models that continuously refine and enhance joint angle recognition through weighted user feedback.

- Optimize deep learning pipelines to minimize latency and boost the real-time responsiveness of the physical robotic hand.

- Integrate LLM reasoning, logic, and image capabilities to enhance compliance and awareness for situational contexts.

Click here for full PDF Version of the official 48x48 ISEF poster

Quick Video Summary

Video submission for participation in the 2025 International Science and Engineering Fair...