VLA-Replica: A Replicable Benchmark for Evaluating Robotics Vision-Language-Action Models with the SO-101 Robotic Gripper


Current Progress

This project explores how to improve the generalization of policies for robotic arms such as the Koch v1.1 and SO-101, both grippers designed for object grasping and manipulation.

10-1-25: Installed LeRobot, conda, and the packages needed for motor setup and calibration.

10-8-25: Successfully set up teleoperation and cable management for Koch v1.1 bimanual grippers.

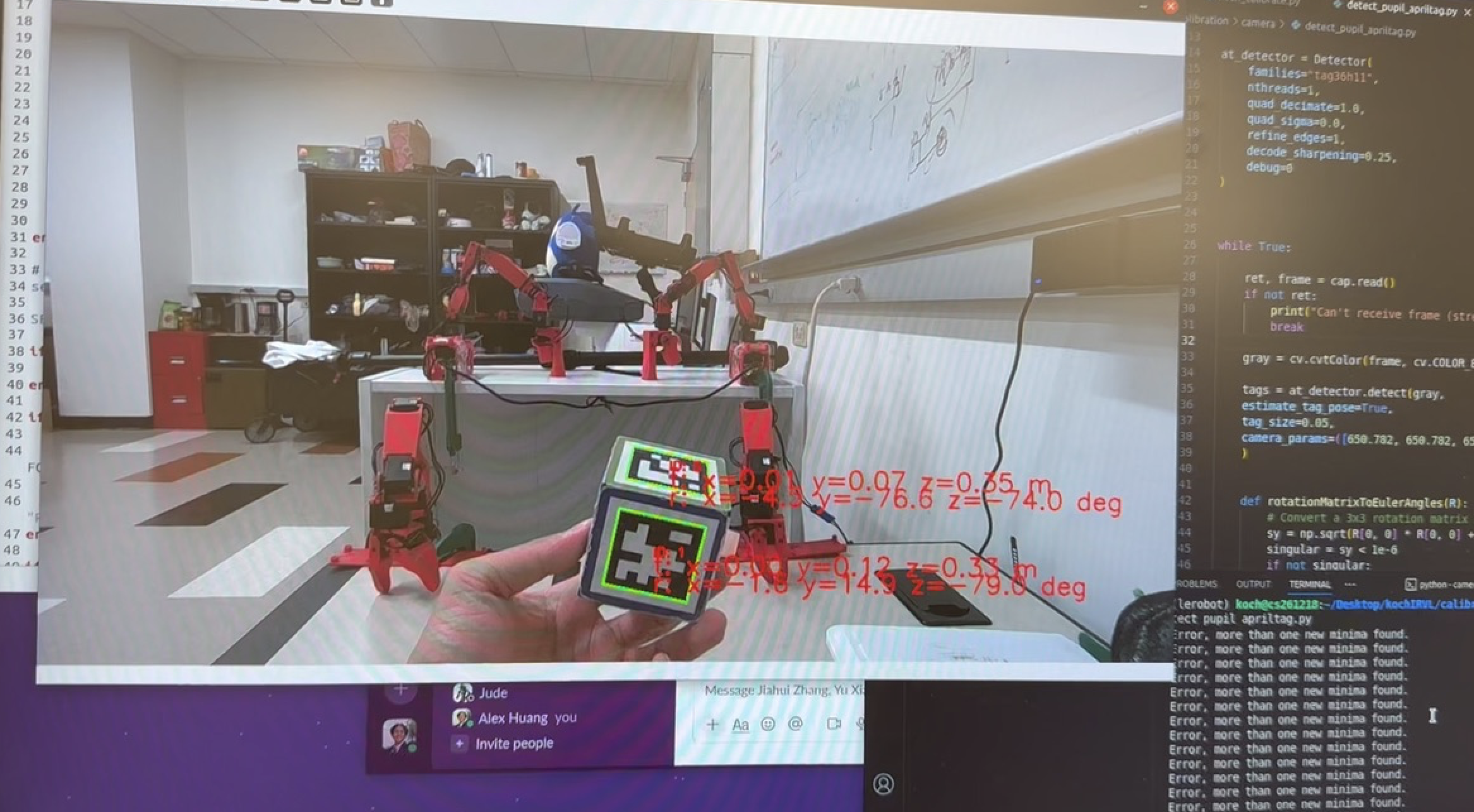

10-17-25: Working on AprilTag detection with OpenCV first (to determine the transformation matrices), followed by image collection for imitation learning.

AprilTag Distance Estimation.
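Distance estimation and transformation matrices can be illustrated with a minimal sketch. It assumes the four tag corners have already been detected (for example with OpenCV's `cv2.aruco` module, which supports AprilTag dictionaries such as `DICT_APRILTAG_36h11`) and that the camera's focal length in pixels is known from calibration. It uses the pinhole approximation Z ≈ f·S/s (focal length times real tag size over apparent tag size), which is only accurate when the tag roughly faces the camera; a full pose from `cv2.solvePnP` would handle tilted tags. The helper names, tag size, focal length, and camera offset below are hypothetical, not this project's actual values.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def mean_side_length_px(corners: Sequence[Point]) -> float:
    """Average edge length (pixels) of the tag quadrilateral; corners given in order."""
    total = 0.0
    for i in range(4):
        x0, y0 = corners[i]
        x1, y1 = corners[(i + 1) % 4]
        total += math.hypot(x1 - x0, y1 - y0)
    return total / 4.0


def estimate_distance_m(corners: Sequence[Point], tag_size_m: float, focal_px: float) -> float:
    """Pinhole approximation Z = f * S / s (assumes the tag roughly faces the camera)."""
    return focal_px * tag_size_m / mean_side_length_px(corners)


def make_transform(R: Matrix, t: Sequence[float]) -> Matrix:
    """Pack a 3x3 rotation R and translation t into a 4x4 homogeneous transform."""
    return [
        [R[0][0], R[0][1], R[0][2], t[0]],
        [R[1][0], R[1][1], R[1][2], t[1]],
        [R[2][0], R[2][1], R[2][2], t[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul4(A: Matrix, B: Matrix) -> Matrix:
    """Compose two 4x4 transforms: T_a_c = T_a_b @ T_b_c."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


if __name__ == "__main__":
    # Hypothetical detection: a 5 cm tag that appears as a 100 px square.
    corners = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
    z = estimate_distance_m(corners, tag_size_m=0.05, focal_px=600.0)

    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical camera mounted 0.5 m from the robot base along z, no rotation.
    T_base_cam = make_transform(identity, [0.0, 0.0, 0.5])
    T_cam_tag = make_transform(identity, [0.0, 0.0, z])
    T_base_tag = matmul4(T_base_cam, T_cam_tag)
    print(f"tag distance from camera: {z:.3f} m")
    print(f"tag z in base frame: {T_base_tag[2][3]:.3f} m")
```

Chaining transforms this way (base to camera, then camera to tag) is what lets a tag pose seen by the camera be expressed in the robot's own frame; in practice `T_base_cam` would come from a one-time extrinsic calibration rather than a hand-measured offset.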

11-9-25: Set up teleoperation and cable management for the SO-101 robotic gripper. It's a lot more powerful than the Koch and has a wider range of motion for more complex tasks!

Current setup for data collection.

11-15-25: Submitted URSA 2025 proposal.

12-1-25: Submitted optimal, suboptimal, and failure trajectories to the Reward-FM dataset (see the aliangdw/utd_so101_policy_ranking entry).

Quick Video Summary

Regular updates will be posted here.